这是一个Python计算机视觉图像识别的assignment代写

Hand in: A report about your experiments (see assignment 1 for content and description). Electronic

copies of your ECJ code and data. Use Excel (or similar app) to create performance graphs, to be

included in your report.

System: Any GP system of your choice. You will need to do image file I/O.

Overview: There are 2 possible problems you can select from: (a) Computer vision; or (b) Evo-Art

and procedural textures. Please do only one!

A. Computer Vision

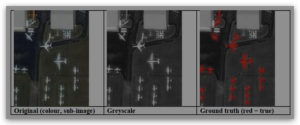

You are going to use GP to perform computer vision. The problem is to identify specific features in

images. For example, consider this photograph of a meteor shower:

The task is to train a GP to identify the image pixels that correspond to meteor trails. To do this, you

need to have a ground truth image, which is a hand-generated image that shows the pixels where

meteors are located (in red).

In this case, you use it as a “solution” for obtaining training data, in order to evolve a genetic program

that identifies meteor locations. You then execute the GP tree over a pixel in the image. If a pixel

resides over a meteor trail, the GP program should generate TRUE (or a value ≥ 0.0). Otherwise it

generates FALSE (value < 0.0). Ideally, it would produce exactly the true/false information residing

in the ground truth image. In a sense, the perfect GP program would be a “meteor filter”.

Another example: identifying planes parked at an airport:

The above image is from Google Maps (satellite), which is a great source of different image data to

use. You could have the GP identify other features from images (cars, buildings, trees, boats in water,

roads, etc.).

To make a ground truth image, you need to carefully hand-paint the pixels that classify what you

want the GP program to identify as “true”. Your GP training should use a selection of positive (red)

and negative pixels. Note that you will almost never get close to 100% correct accuracy, since some

pixels will be tricky to classify. Edges around the red pixels are usually challenging, especially

considering that the painted ground truth image will have errors and noise. For testing data, you can

use the pixels that were not used for training. Alternatively, you might test the GP on another image

(with similar photographic characteristics – colours, scale, etc.).

Your program will be called by “wrapper code”, which will apply your GP program on all the pixels

of an image one-by-one. Hopefully, your program will correctly detect most positive pixels. These are

called true positives. Pixels that are correctly identified as not the object are true negatives.

Sometimes errors may occur. Pixels falsely identified as objects are false positives, while object

pixels that are missed are false negatives. Hence your fitness function will simply tally the overall

percentage of correctly identified pixels. But when computing a fitness value, the fitness should be

normalized with respect to total true positives and true negatives; otherwise, it may benefit a GP to

simply identify everything as “false”, because the majority of area of an image, such as seen in the

ones above, may not have aircraft or meteors! You should also dump out the true/false

positive/negative scores on your testing image at the end of a run (more on this later).

Image data: For training and testing, you need to read in the source and ground truth images. You

can use a B&W image. (Colour are fine too, but will require a bit more work in the GP language; you

might therefore convert the colour image to greyscale). The training process might presume that any

portion of the image that is not painted over represents pixels that are to be classified as false. Note

that using the entire image for fitness evaluation is excessive and very time consuming. A

recommended strategy is to use K pixels residing on red areas as positive examples, and L pixels on

non-red as negative examples, where K and L are user parameters. You can search for them

randomly, or pre-select them by hand (which is more work to do, but you might be able to select a

smaller and more effective training set).